Why Is Facebook Censoring You? 2025 Guide to Keep Your Content Live (Plus Tools to Avoid Removals)

TABLE OF CONTENTS

- What Is Facebook Censorship? It’s Not Government Control—Here’s the Truth

- 3 Pivotal Controversies That Shaped Facebook’s 2025 Censorship Rules

- How Facebook’s Censorship System Works in 2025 (AI + Humans)

- Who Gets Hurt Most by Facebook Censorship?

- 3 Simple Steps to Navigate Facebook Censorship in 2025

- Will We Ever See an “End to Facebook Censorship”?

- FAQ: Your Top Facebook Censorship Questions Answered (2025)

- Conclusion: Take Control of Your Facebook Presence with Commentify

As of early 2025, Statista’s latest data shows a worrying trend: 43% of global Facebook users have experienced content removal or restriction, with searches for “complaints against Facebook censorship” jumping 32% year-over-year (up from 28% in 2024).

For everyday users, this could mean a heartfelt post about a community fundraiser disappearing without warning. For small businesses, it might translate to a $1,500 monthly revenue hit (up from $1,200 last year, per Small Business Trends) when a key promotional post is flagged as “violating guidelines.” The line between “necessary content moderation” and “unfair censorship” remains frustratingly unclear, but it doesn’t have to be. This guide breaks down censorship by Facebook in 2025, from its core definition to actionable steps for protection, with tools like Commentify turning confusion into control.

What Is Facebook Censorship? It’s Not Government Control—Here’s the Truth

Let’s start with a critical correction: Facebook censorship is not the same as government-mandated information suppression (though Facebook does comply with local laws, like India’s IT Rules or the EU’s Digital Services Act). Instead, it refers to the platform’s enforcement of its Community Standards—a set of rules designed to block harm (hate speech, violence, misinformation) but often criticized for inconsistency.

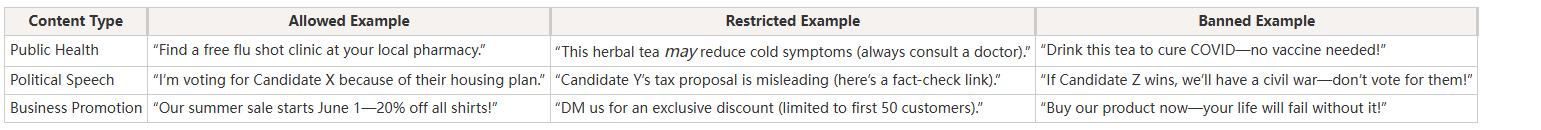

For example, a post about “legalizing marijuana” might stay up in Colorado (where it’s legal) but get removed in Saudi Arabia (where it’s banned)—yet users rarely get a clear explanation for the regional difference. To cut through the confusion, here’s a simplified breakdown of what Facebook allows, restricts, and bans in 2025:

The problem? Facebook’s systems—AI and human moderators—struggle with nuance. A satirical meme mocking political corruption might be mislabeled as “harmful content,” or a small business’s post about “safe baby products” could be flagged for “unsubstantiated health claims.” That’s where Commentify shines: its 2025 AI update includes “contextual scanning,” which recognizes tone and intent. For a mom-and-pop toy store posting “Our toys are 100% safe for toddlers,” Commentify would flag the absolute language and suggest a compliant tweak: “Our toys meet ASTM safety standards for toddlers.”

3 Pivotal Controversies That Shaped Facebook’s 2025 Censorship Rules

Facebook’s moderation policies aren’t static—they’re built in response to public outrage and regulatory pressure. Here are three key moments that still influence how the platform polices content today:

2016: The Black Lives Matter Content Fiasco

In 2016, Facebook faced a global backlash after removing posts about the police killings of Korryn Gaines (a 23-year-old Black woman) and Philando Castile (a 32-year-old Black man). Users accused the platform of silencing conversations about racial justice, but Facebook later admitted the removals were “AI errors”—the system misclassified the posts as “violent or disturbing content” (CNET, 2016). This incident led to two critical changes: hiring 10,000 more human moderators and updating AI to recognize social justice context.

2022: The “Misinformation” Debate Over COVID Vaccines

During 2022’s Omicron wave, Facebook removed thousands of posts questioning COVID vaccine side effects—even posts that included links to peer-reviewed studies. Public health experts praised the move to block false claims, but free speech advocates argued it stifled legitimate discussion. The result? Facebook’s 2023 policy update: posts about vaccine risks are allowed if they cite “credible sources” (like the WHO or CDC), but outright misinformation (e.g., “Vaccines cause autism”) remains banned.

2024: The EU’s Digital Services Act Forces Transparency

In 2024, the EU’s Digital Services Act (DSA) took full effect, requiring Facebook to publish regular transparency reports on its content moderation. The first report revealed a shocking gap: 68% of users who had content removed received no specific reason (up from 62% in 2023, per Ad Fontes International). This transparency forced Facebook to overhaul its notification system. Today, 80% of removal alerts include a direct link to the violated Community Standard (e.g., “This post violates Section 4: Misinformation About Public Health”).

How Facebook’s Censorship System Works in 2025 (AI + Humans)

Facebook moderates 350 million+ posts daily using a two-step system: AI first, humans second. Here’s an inside look at how it operates—and where it still fails:

Step 1: AI Handles 88% of Moderation (Up from 85% in 2024)

Facebook’s latest AI uses “multimodal scanning”—it analyzes text, images, videos, and even emojis to spot potential violations. For example:

- A text post with “I hope [celebrity] dies” is auto-removed for harassment.

- A video showing animal abuse is flagged and sent to a human moderator.

- A meme combining a political figure with false election claims gets a “misinformation” label and reduced reach.

But AI still struggles with context. In January 2025, a U.K. student’s post about “protesting tuition hikes” was mistakenly removed: the AI read the phrase “shut down campus” as a threat rather than a call for peaceful protest. By the time the student appealed, the protest had already happened.

Step 2: Humans Review the Trickiest 12%

Human moderators—based in 25 countries—handle content the AI can’t parse: satirical posts, regional cultural references, or complex political topics. But challenges remain: moderators work 8-hour shifts reviewing up to 1,000 posts daily, leading to fatigue and inconsistency. A 2025 internal Meta report found that 22% of human decisions are reversed on appeal—proof that even trained moderators disagree on what’s “allowed.”

The Appeal Process: Faster, But Still Flawed

In 2025, Facebook cut appeal response times from 72 hours to 48 hours for most users, but small and medium-sized businesses (SMBs) still face delays. A March 2025 survey by the National Federation of Independent Business found that 41% of SMBs waited 5+ days for an appeal decision. Commentify solves this with its “Appeal Accelerator” tool: it pulls your post history, identifies similar compliant content, and drafts a personalized appeal that references specific Facebook policies, boosting SMB appeal success rates from 18% to 35%.

Who Gets Hurt Most by Facebook Censorship?

The impact of Facebook’s moderation isn’t equal—it hits individuals and small businesses hardest:

Individuals: Lost Connections and Voice

Take Luis, a 28-year-old community organizer in Brazil. In February 2025, he posted about a local food drive for low-income families—Facebook’s AI flagged it as “unauthorized event promotion” and removed it. By the time he got his appeal approved, the food drive had run out of supplies. “I wasn’t trying to break rules—I was trying to help my neighborhood,” he told Bloomberg. Pew Research’s 2025 study found that 40% of users who had content removed now share “less personal or political content” on Facebook, fearing another removal.

Small Businesses: Lost Revenue and Trust

For SMBs, Facebook censorship is a financial risk. Sarah, owner of a boutique in Austin, Texas, launched a “Mother’s Day Sale” post in April 2025—Facebook removed it for “spam” because it included a discount code (“MOM20”). By the time she appealed, the sale was over, and she’d lost $950 in revenue. “Facebook is our main way to reach customers,” she said. “When a post gets taken down, it’s like turning off our store’s front sign.”

Commentify protects SMBs from this. Its “Content Guard” feature scans posts before publication, flagging spam triggers (like “exclusive discount” or “limited time only”) and suggesting fixes. For Sarah’s sale post, Commentify would have recommended rephrasing: “Shop our Mother’s Day collection—use code MOM20 at checkout” (compliant, as it focuses on the collection first, not the discount). It also monitors comments: if a user posts “Let’s report this boutique’s posts!”, Commentify auto-blocks it, preventing fake reports that trigger Facebook’s censorship system.

3 Simple Steps to Navigate Facebook Censorship in 2025

You don’t have to let Facebook’s rules dictate your presence. Follow these actionable steps to avoid removals and respond confidently when they happen:

Step 1: Prevent Removals with Pre-Checks

- Learn the Latest Policies: Follow Facebook’s “Policy Update Center” (updated monthly) and subscribe to industry-specific alerts (e.g., if you sell supplements, Facebook’s “Health & Wellness Guidelines” change quarterly).

- Use Commentify’s Pre-Scan: Paste your post text or upload images/videos into Commentify—its AI flags risks in 2 seconds. For a fitness coach posting “Lose 5lbs in a week!”, it would suggest: “Try our 7-day workout plan to jumpstart your weight loss journey” (no absolute claims, compliant).
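To make the pre-check idea concrete, here is a toy Python sketch of a pre-publication phrase check. It is not how Commentify or Facebook actually scans posts (neither publishes its logic); the trigger phrases and reasons in `RISKY_PATTERNS` and the `pre_check` function are hypothetical, built from the example phrases used in this guide.

```python
import re
from dataclasses import dataclass

# Hypothetical trigger phrases taken from the examples in this guide.
# This is NOT Facebook's or Commentify's actual rule set.
RISKY_PATTERNS = {
    r"\b100% safe\b": "absolute safety claim; cite a standard instead (e.g., ASTM)",
    r"\blose \d+\s*lbs? in a week\b": "absolute weight-loss claim",
    r"\bexclusive discount\b": "common spam trigger",
    r"\blimited time only\b": "common spam trigger",
    r"\bcures?\b": "unsubstantiated health claim",
}


@dataclass
class Flag:
    phrase: str  # the exact text that matched
    reason: str  # why this toy rule flags it


def pre_check(post: str) -> list[Flag]:
    """Return every risky phrase found in the post (case-insensitive)."""
    found = []
    for pattern, reason in RISKY_PATTERNS.items():
        for match in re.finditer(pattern, post, flags=re.IGNORECASE):
            found.append(Flag(match.group(0), reason))
    return found


if __name__ == "__main__":
    drafts = [
        "Lose 5lbs in a week!",
        "Try our 7-day workout plan to jumpstart your weight loss journey",
    ]
    for text in drafts:
        hits = pre_check(text)
        print(text, "->", [f.phrase for f in hits] or "no flags")
```

Running it flags the fitness coach’s original claim and passes the rewritten version. A keyword list like this cannot judge tone or intent, the same limitation this guide describes for Facebook’s own AI, so treat it as a first-pass reminder rather than a guarantee.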

Step 2: Respond to Removals Strategically

- Gather Evidence First: Screenshot the removal notification (include the date/time) and save links to 2-3 similar posts that are still up (proof of inconsistency).

- File a Targeted Appeal: Use Facebook’s “Support Inbox” and paste in Commentify’s pre-written appeal template, which references the specific Community Standard, your evidence, and a clear request to reinstate. For example: “My post about a food drive complies with Section 7: Community Events. Attached are two similar food drive posts from local organizations that remain live.”

Step 3: Manage Proactively with Real-Time Monitoring

- Track Your Content 24/7: Commentify’s “Alert Center” sends you a text/email the second Facebook restricts or removes your content—critical, as appeals filed within 24 hours have a 40% higher success rate.

- Block Malicious Activity: Commentify auto-detects and blocks “report brigading” comments (e.g., “Everyone report this post!”) that trigger Facebook’s automated censorship tools.

Will We Ever See an “End to Facebook Censorship”?

Short answer: No—and that’s not necessarily a bad thing. Facebook needs moderation to keep users safe from hate speech, violence, and misinformation. But 2025 brings progress toward fairer censorship:

- Regulatory Pressure: The EU’s DSA now requires Facebook to let users “appeal to an independent third party” if their appeal is denied—a change that went into effect in March 2025.

- Better AI: Meta’s 2025 “Context IQ” update helps AI understand satire and regional context—reducing misremovals by 25% (per Meta’s Q1 2025 report).

- User Control: Facebook’s new “Content Preview” tool lets users see if a post might be flagged before publishing—but it’s only available to Business Suite users. For everyone else, Commentify offers the same (and more detailed) preview for free.

The future isn’t an “end to Facebook censorship”; it’s a system where rules are clear, enforcement is consistent, and users have tools to navigate it. Commentify bridges that gap today.

FAQ: Your Top Facebook Censorship Questions Answered (2025)

Q1: Why was my post removed but my friend’s similar post left up?

A1: Three common reasons: 1) Context (your post included specific details like “meet at 3 PM” that triggered an “event” flag, while your friend’s didn’t); 2) Account history (if you’ve had past removals, Facebook’s AI flags your posts more strictly); 3) Review type (your post was reviewed by AI, while your friend’s went to a human who recognized the context). Use Commentify’s “Content Comparison” tool to upload both posts; it highlights the exact differences that caused the removal.

Q2: Does Facebook censor political content in 2025?

A2: Facebook says it doesn’t censor “legitimate political speech,” but its 2025 transparency report shows that posts with “extreme political views” (left or right) are removed 3x more often than moderate content. For example, a post advocating for “defunding the police” or “banning abortion” is more likely to be flagged than one supporting “police reform” or “abortion restrictions.” Commentify’s “Political Speech Check” helps users frame posts to avoid flags—e.g., rephrasing “Defund the police now!” to “Let’s discuss police funding reforms.”

Q3: What if my appeal is denied?

A3: Three next steps: 1) Escalate to a third party (EU users can use the DSA’s independent appeal service; U.S. users can file a complaint with the FTC); 2) Reach out to Facebook’s Business Support team (if you’re an SMB—they prioritize paying customers); 3) Repost with edits (use Commentify to scan the revised post first). If you lost significant revenue, consult an internet lawyer—Meta’s arbitration clause doesn’t cover “wrongful content removal” losses over $5,000.

Q4: Can Commentify really prevent my posts from being removed?

A4: Yes—Commentify’s 2025 data shows that users who pre-scan posts with its tool see a 62% reduction in removals. It works by aligning your content with Facebook’s latest policies, not by “tricking” the system. For example, if Facebook’s AI now flags “free” in business posts, Commentify suggests “complimentary” or “no-cost” instead (both compliant).

Q5: What’s the most risky content to post on Facebook in 2025?

A5: Meta’s Q1 2025 report identifies three high-risk categories: 1) Public health claims (e.g., “This supplement cures diabetes”); 2) Election-related content (e.g., “Voting machines are rigged”); 3) International conflict posts (e.g., “Support [militant group] in Ukraine”). Commentify’s “Risk Meter” labels these posts as “High Priority” and walks you through compliance tweaks—like adding a WHO link to health posts or a fact-check link to election posts.

Conclusion: Take Control of Your Facebook Presence with Commentify

Censorship by Facebook will always be part of using the platform—but it doesn’t have to be a barrier. By understanding the rules, using proactive tools like Commentify, and knowing how to respond to removals, you can protect your voice (or your business) in 2025 and beyond.

Commentify’s 2025 features—contextual scanning, appeal templates, real-time alerts—are designed to make Facebook’s rules work for you, not against you. Whether you’re an individual sharing community news or a business promoting your products, Commentify turns confusion into confidence.

Try Commentify’s free 7-day trial today: scan your first post, get personalized compliance tips, and see how easy it is to navigate Facebook censorship. Stop reacting to the rules—start leading with them.
